"What is X?" - Modeling the 2008-2009 Financial Systems Meltdown

We're about to start a detailed walkthrough of applying a "simple" statistical thermodynamic model to the Wall Street players in the 2007-2009 timeframe. The two kinds of information that I'll be joining together for this will be a description of Wall Street dynamics, based largely on Chasing Goldman Sachs (see previous blogposts for link), and the two-state Ising thermodynamic model that I've been presenting over the past several posts.

The model first. The first and most important thing that we have to determine when we're applying a model is: What are the key variables and parameters, and what do they mean? To do this, we need to have an a priori understanding of the model itself - how it behaves, what it could possibly provide for us in terms of understanding (and even predicting) a situation.

The model that I've been taking us through is based on modeling a system of a fairly large number of "units," where each "unit" can be either "on" or "off" (or "active" or "inactive," depending on our point of view). The important thing here is: If we want to use this model, we have to make the very dramatic simplification that all the "units" that we're modeling are in one of only two possible states. This is an extreme simplification. The value of making this simplification will show up only if, after making all the parameter assignments and turning the "model crank," we get some sort of interesting answer. (This is kind of like reading a mystery novel: we don't know how it will turn out until the end.)

In our case, the simplification that we're going to make is this:

1) We will make the "units" be all the active players on Wall Street that could possibly engage in some sort of highly leveraged buy-out or other fairly extreme (leveraged, risky) undertaking. This includes both buy-side and sell-side. It includes the banks, the hedge funds, and - essentially - all the players. The only question is: Were they involved in a "risky" (or "highly leveraged") transaction or not?

2) Then, x - the only real "variable" in our system - represents the fraction of the total number of "units" (banks, hedge funds, whatever) that were involved in such transactions at a given time.

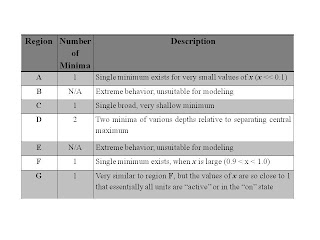

We start our walkthrough at Point 1 of yesterday's blog, in Region A. Region A is the area where there is only one free energy minimum. That means there is only one "stable state." For Region A, this minimum occurs for a relatively low value of x, as is shown in Figure 1.

We can see that in this Figure 1 (characteristic for all of Region A), the value of x giving the free energy minimum is about x=0.1.

So this doesn't mean that no banks, hedge funds, etc. were engaged in risky deals - just that a very small fraction of the overall number of banks, hedge funds, etc. were so involved. Goldman Sachs, for example, took some early strategic steps that were risky (highly leveraged), but it distributed its risk by taking on diverse plays.

If we're going to apply this model, we now need to identify the meaning of the two parameters involved: e1 and a=e2/e1.

When we look at the phase space diagrams of previous posts, we see that there are two parameters. The one across the top (ranging from 1.5 to 8.5) is e1. (I'm using a simplified notation here compared to the Greek letters and subscripting in the equations themselves.)

e1 represents an "activation energy," or the "energy cost" (enthalpy per unit) of having a unit in an active state.

Let's have a quick review of basic thermodynamic principles. A system is "at equilibrium" when the free energy is at a minimum. (In the Figure 1 for this post, this occurs when x is about 0.1.)

Free energy is enthalpy minus (a constant times) entropy. (We work with the "reduced" free energy for all of our discussions, where constants and other terms have been divided out, subtracted out, or otherwise normalized; so all future references to enthalpy will really mean a "reduced" enthalpy where various constants have been worked out to give a simpler, cleaner equation.)

As per our first equation, several blogposts ago, we really have two enthalpy terms; one is an enthalpy-per-active-unit (linear in x), and the other is an interaction energy term, which is an "interaction energy" times x-squared.

The enthalpy-per-active-unit is e1.

If we're going to make this model work, we need to figure out what this means.

Suppose that we took our basic free energy equation, and pretended that there was no enthalpy at all; there was no extra "energy" put into the system when the various units were "on" or "off." Then the free energy would be at a minimum when the entropy was at a maximum, and this occurs when x=0.5. (The entropy equation here is symmetric in x and 1-x: entropy = -[x ln(x) + (1-x) ln(1-x)], written with the sign that makes the entropy positive.)
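As a quick numerical check of the claim that the entropy peaks at x=0.5, here is a minimal Python sketch. It assumes the entropy is taken with the sign that makes it positive, entropy = -[x ln(x) + (1-x) ln(1-x)]; the function name `entropy` and the grid of test values are my own illustrative choices, not part of the original equations.

```python
import math

def entropy(x: float) -> float:
    """Reduced two-state entropy for a fraction x of active units (0 < x < 1).

    Assumed sign convention: positive entropy, -[x ln x + (1-x) ln(1-x)].
    """
    return -(x * math.log(x) + (1.0 - x) * math.log(1.0 - x))

# Scan x = 0.01, 0.02, ..., 0.99 and pick the value with the largest entropy.
grid = [i / 100 for i in range(1, 100)]
x_peak = max(grid, key=entropy)

print(x_peak)          # 0.5
print(entropy(0.5))    # ln(2), roughly 0.693
print(entropy(0.1))    # same value as entropy(0.9), by symmetry
```

With no enthalpy in play, nothing favors "on" over "off," so the most probable arrangement is an even split.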

What happens when we introduce the enthalpy terms is that we "skew" the free energy minimum to one side or another. Region A corresponds to the area where the interaction energy is low, so for the moment, let's pretend that it doesn't exist. We'll focus on the physical meaning of the parameter e1. It has to be something that shifts the free energy minimum to the left; that is, it makes the value of x that produces a free energy minimum smaller. (In Figure 1, the free energy is at a minimum when x=0.1 instead of 0.5.)

The enthalpy-per-unit term, e1, associated with Region A (and in fact all of Figure 1) is a positive term. It means that there is an "energy cost" to having a unit in an active state.

We're going to interpret this cost as risk. We will say that e1 models the risk for a unit (a bank, a hedge fund, etc.) to be involved in a very leveraged transaction. The risk can be small (e1=2) or large (e1=7). Either way, we get a single minimum if there is no "interaction" energy; this minimum is for a low value of x, meaning that relatively few units are "on" or are involved in risky transactions.
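To put rough numbers on this, here is a small sketch under a stated assumption: that with the interaction term dropped, the reduced free energy is simply e1*x + x ln(x) + (1-x) ln(1-x). Setting its derivative, e1 + ln(x/(1-x)), to zero gives x = 1/(1 + exp(e1)). The normalization and the function name `x_min_no_interaction` are my assumptions for illustration; the equations in the earlier posts may carry different constants.

```python
import math

def x_min_no_interaction(e1: float) -> float:
    """Fraction of active units at the free energy minimum when e2 = 0.

    Assumes reduced free energy F(x) = e1*x + x ln x + (1-x) ln(1-x),
    whose derivative e1 + ln(x / (1 - x)) vanishes at x = 1 / (1 + exp(e1)).
    """
    return 1.0 / (1.0 + math.exp(e1))

for e1 in (2.0, 7.0):
    print(e1, round(x_min_no_interaction(e1), 4))
# 2.0 0.1192
# 7.0 0.0009
```

Under this assumed form, a "small" risk of e1=2 puts the minimum near x=0.12, close to the x=0.1 read off Figure 1, while a "large" risk of e1=7 pushes it down to less than one unit in a thousand.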

Now we come to the "crux of the biscuit." What does the other parameter, a, mean? This is our interaction-energy term; it multiplies x-squared. We get the "interesting behavior" in Region D (the middle, pink region of the phase space diagram of yesterday's post). And in order to get the system to move from what we'd think of as a "logical, sane" equilibrium state - one in which relatively few units are involved in risky transactions - to the equilibrium state of Regions F or G (where most of the units are "on") - something has to happen. Something has to "force" more units to take on more "risk."
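To see how a term multiplying x-squared can change the number of stable states, here is a hedged sketch that counts free energy minima on a grid. It assumes a reduced free energy of the form e1*x + e2*x^2 + x ln(x) + (1-x) ln(1-x), and it takes the interaction coefficient e2 directly (rather than a=e2/e1) with a negative sign meaning that active units make it cheaper for other units to be active. Both the functional form and that sign convention are my assumptions, and the values e1=4, e2=-4 are illustrative only; they are not read off the phase space diagram.

```python
import math

def free_energy(x: float, e1: float, e2: float) -> float:
    """Assumed reduced free energy: per-unit cost + interaction - entropy."""
    return e1 * x + e2 * x * x + x * math.log(x) + (1.0 - x) * math.log(1.0 - x)

def local_minima(e1: float, e2: float, n: int = 999) -> list:
    """Return grid points in (0, 1) that are strict local minima of the free energy."""
    xs = [(i + 1) / (n + 1) for i in range(n)]      # 0.001, 0.002, ..., 0.999
    fs = [free_energy(x, e1, e2) for x in xs]
    return [xs[i] for i in range(1, n - 1)
            if fs[i] < fs[i - 1] and fs[i] < fs[i + 1]]

print(local_minima(4.0, 0.0))    # no interaction: a single minimum at low x
print(local_minima(4.0, -4.0))   # strong interaction: one low-x and one high-x minimum
```

In this toy setting, switching on the interaction is what opens up a second stable state in which most units are "on"; without it, the only equilibrium is the low-x one.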

This "something" has to do with how the units interact with each other.

What would this mean in Wall Street social and political dynamics? How about peer pressure? Think about it.

Interaction-energy = peer pressure. This is how banks and other institutions - who knew that what they were doing was not only risky, but downright foolhardy - were moving into these highly leveraged situations.

In terms of Figure 1 of the previous post, the whole system of Wall Street financial institutions was going from Point 1 to Point 2 to Point 3. By the time the system was at Point 3, almost all units were involved in risky transactions (I'll show the free energy diagram in the next posting), and the "free energy minimum" was VERY deep. And there was no "alternative." There was only a single free energy minimum. In other words, the whole system was trapped in a very risky situation.